Meta's AI Headset for Military Training: A Brave New World or Ethical Minefield?

Meta, the tech giant formerly known as Facebook, is venturing into uncharted territory: military technology. In a move sparking both excitement and concern, the company is reportedly working with defense contractors to develop an AI-powered headset designed to train members of the armed forces. This development raises critical questions about the role of civilian technology companies in defense and its implications for the future of warfare.

The Project: Immersive Training with AI

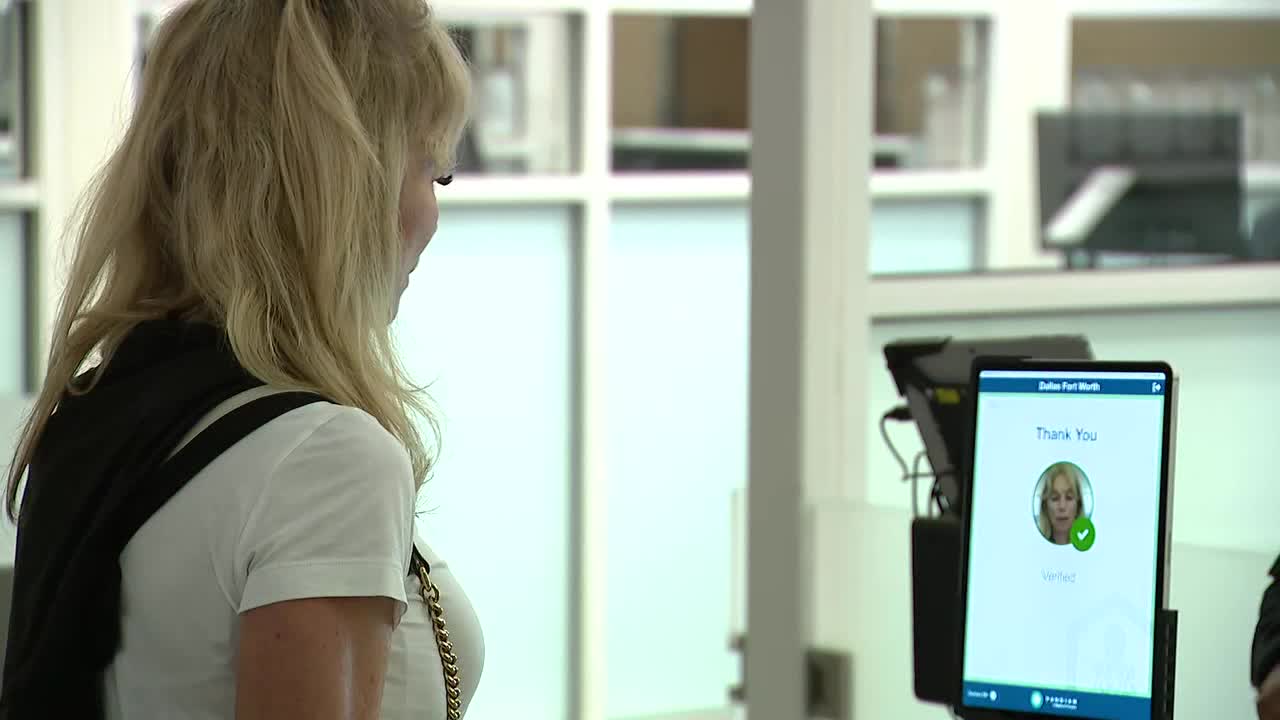

The headset, reportedly being developed in partnership with undisclosed defense contractors, leverages Meta's expertise in virtual and augmented reality. It aims to create highly realistic and immersive training simulations for soldiers, allowing them to hone their skills in a safe and controlled environment. The AI component is crucial, providing adaptive scenarios and personalized feedback to enhance the learning experience. Imagine soldiers practicing battlefield tactics, urban warfare strategies, or even de-escalation techniques, all within a virtual world that responds to their actions in real-time.

Why Meta? The Power of VR/AR Expertise

Why would Meta, a company synonymous with social media, be involved in military technology? The answer lies in its pioneering work in virtual and augmented reality. Meta has invested billions of dollars in developing advanced VR/AR hardware and software, including the Quest line of headsets. This expertise is highly valuable for military training, which increasingly relies on immersive simulations to prepare soldiers for the complexities of modern combat.

The Ethical Debate: A Growing Concern

However, Meta’s foray into military technology isn’t without controversy. Critics argue that it blurs the lines between civilian innovation and military applications, raising ethical concerns about the potential misuse of AI and VR technology. Some worry that Meta's involvement could normalize the use of advanced technology in warfare and contribute to an arms race. The company's past controversies regarding data privacy and the spread of misinformation further fuel these concerns.

“It’s a slippery slope,” says Dr. Anya Sharma, a professor of ethics and technology at Stanford University. “Once a company starts providing technology to the military, it becomes increasingly difficult to control how that technology is used. The potential for unintended consequences is significant.”

Meta’s Response: Focused on Training, Not Weaponization

Meta has attempted to address these concerns, stating that its involvement is strictly limited to training and simulation purposes. The company maintains that it is not developing weapons or technologies that could directly harm individuals. However, this distinction is not always clear-cut: highly realistic training simulations could desensitize trainees to the consequences of violence.

The Future of Military Technology: A Convergence of Worlds

Meta’s project highlights a growing trend: the convergence of civilian technology and military applications. As technology advances, the boundary between the two is becoming harder to draw. This trend presents both opportunities and challenges. On the one hand, it can lead to more effective and humane training methods. On the other hand, it raises profound ethical questions that society must grapple with. The development of Meta’s AI headset is just an early step into what promises to be a complex and evolving landscape.

Ultimately, the success of this venture – and the broader trend of civilian tech companies entering the defense sector – will depend on careful consideration of the ethical implications and a commitment to responsible innovation. The world is watching closely to see how Meta navigates this challenging new frontier.
